Having used 3dSynthesize to create a dataset with the effects of no interest, we are now ready to subtract those effects from the dataset that went into 3dDeconvolve; for you players using the AFNI_data6 tutorial data, this is the all_runs.FT+orig dataset. We can use 3dcalc for this operation:

3dcalc -a all_runs.FT+orig -b effectsNoInterest+orig -expr 'a-b' -prefix cleanData+orig

This will create a time series with all the drift correction and motion parameter schmutz subtracted out and cast into the pit. What is left over will be signal untainted by motion or drift effects, which we can then use to generate a seed time series.

Before that, however, there is the additional step of warping our data to a template space (such as MNI or Talairach). Assuming you have already warped your anatomical dataset to a template space either manually or using @auto_tlrc, and assuming that there is already good alignment between your anatomical and functional data, you can use the command adwarp:

adwarp -apar anat_final.FT+tlrc -dpar cleanData+orig -dxyz 3 -prefix EPI_subj_01

(Note: There are better ways to do warping, such as the warping option built into align_epi_anat.py (which calls upon @auto_tlrc), but for pedagogical purposes we will stick with adwarp.)

Once this is done, all we need to do is select a seed region for our functional connectivity analysis. This could be a single voxel, a region of interest that averages over an entire area of voxels, or a region selected based on a contrast or an anatomical landmark; it depends on your research question, or whether you want to mess around and do some exploratory analyses. For now, we will use a single voxel using XYZ coordinates centered on the left motor cortex (note that, in this coordinate system, left is positive and posterior is positive):

3dmaskdump -noijk -dbox 20 19 53 EPI_subj_01+tlrc > ideal_ts.1D

Because 3dmaskdump outputs a single row, we will need to transpose this into a column:

1dtranspose ideal_ts.1D LeftMotorIdeal.1D

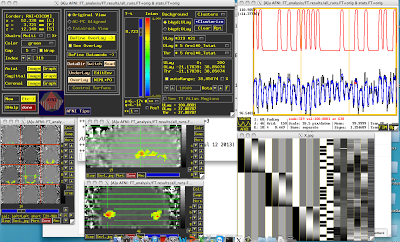

After you have output your ideal timeseries, open up the AFNI viewer and make sure that what was output matches up with the actual timeseries. In this example, set EPI_subj_01+tlrc as an underlay and right click in the slices viewer to jump to XYZ coordinates of 20, 19, 53; open up the timeseries graph as well, and scroll to the first time point. Does the value there match up with the first entry of your ideal time file? If not, you may have mis-specified the voxel you wanted, or you are using a different XYZ grid than is displayed in the upper left corner of the AFNI viewer. If you run into this situation, consult your local AFNI guru; if you don't have one of those, well...

In any case, we now have all the ingredients for the functional connectivity analysis, and we're ready to pull the trigger. Assemble your materials using 3dfim+ (which, as far as I can understand, stands for "functional intensity map," or something like that):

3dfim+ -polort 3 -input EPI_subj_01+tlrc -ideal_file LeftMotorIdeal.1D -out Correlation -bucket Corr_subj01

You will now have a dataset, Corr_subj_01+tlrc, which is a functional connectivity map between your seed region and the rest of the brain. Note that the threshold slider will now be in R values, instead of beta coefficients or t-statistics; therefore, make sure the order of magnitude button (next to the '**' below the slider) is 0, to restrict the range between +1 and -1.

The last step is to convert these correlation values into z-scores, to reduce skewness and make the distribution more normal. This is done through Fisher's R-to-Z transformation, given by the formula z

= (1/2) * ln((1+r)/(1-r)). Using 3dcalc, we can apply this transformation:

3dcalc -a Corr_subj01+tlrc -expr 'log((1+a)/(1-a))/2' -prefix Corr_subj01_Z+tlrc

Those Z-maps can then be entered into a second-level design just like any other, using 3dttest+ or some other variation.

Sanity check: When viewing the correlation maps, jump to your seed region and observe the r-value. It should be 1, which makes sense given that this was the region we were correlating with every other part of the brain, and correlating it with itself should be perfect.

Like me.