In a desperate attempt to make myself look cool and connected, on my lab webpage I wrote that my research

...focuses on the application of fMRI and computational modeling in order to further understand prediction and evaluation mechanisms in the medial prefrontal cortex and associated cortical and subcortical areas...

Lies. By God, lies. I know as much about computational modeling as I do about how Band-Aids work or what is up an elephant's trunk. I had hoped that I would grow into the description I wrote for myself; but alas, as with my pathetic attempts to wake up every morning before ten o'clock, or my resolution to eat vegetables at least once a week, this also has proved too ambitious a goal; and slowly, steadily, I find myself engulfed in a blackened pit of despair.

Computational modeling - mystery of mysteries. In my academic youth I observed how cognitive neuroscientists outlined computational models of how certain parts of the brain work; I took notice that their work was received with plaudits and the feverish adoration of my fellow nerds; I then burned with jealousy upon seeing these modelers at conferences, mobs of slack-jawed science junkies surrounding their posters, trains of odalisques in their wake as they made their way back to their hotel chambers at the local Motel 6 and then proceeded to sink into the ocean of their own lust. For me, learning the secrets of this dark art meant unlocking the mysteries of the universe; I was convinced it would expand my consciousness a thousandfold.

I work with a computational modeler in my lab - he is the paragon of happiness. He goes about his work with zest and vigor, modeling anything and everything with confidence; not for a moment does self-doubt cast its shadow upon his soul. He is the envy of the entire psychology department; he has a spring in his step and a knowing wink in his eye; the very mention of his name is enough to make the ladies' heads turn. He has it all, because he knows the secrets, the joys, the unbounded ecstasies of computational modeling.

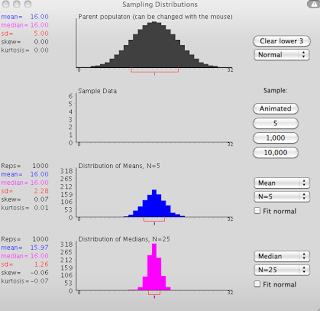

Desiring to have this knowledge for myself, I enrolled in a class about computational modeling. I hoped to gain some insight, some clarity. So far I have only found myself entangled in a confused mess. I hold onto the hope that through perseverance something will eventually stick.

However, the class has provided useful resources to get the beginner started. A working knowledge of the electrochemical properties of neurons is essential, as is modeling their effects through software such as Matlab. The Book of Genesis is a good place to get started with sample code and to catch up on the modeling argot; likewise, the CCN wiki over at Colorado is a well-written introduction to the concepts of modeling and how it applies to different cognitive domains.

I hope that you get more out of them than I have so far; I will post more about my journey as the semester goes on.