There comes a time when a graduate student is selected for the dubious honor of reviewing an article. This is the "peer-review process," where your academic equals, also known as "peers," or, less commonly, "fellow nerds," pass "judgment" on an "article" to determine if it is "suitable" for "publication." Of course, actually recommending an article for publication rarely "happens," and it is much more common for an article to be "rejected," similar to what happens in every other area of your life, such as "work" or "dating."

Clearly, then, publishing articles is a formidable process, with failure all but certain. Because of this, instead of merely relying on the quality and scientific integrity of their work to make it publication-worthy, scientists sometimes resort to other tactics, by which I mean "bribes."

By bribes, I do not mean simply asking for money in exchange for recommending an article for publication. That would be dishonest. And also be careful not to confuse bribes with "extortion," which is also known in certain circles as "publication fees."

Instead, the transfer of money between article authors and reviewers is much more subtle. I should add that if you feel any qualms about asking for bribes, keep in mind that this entire process is implied and condoned, similar to insider trading, cheating at golf, or using your finger to scrape the last bits of Nutella from the bottom of the jar. Everybody does it.

In order to request a bribe through your journal review, however, you need to exercise the utmost caution and tact, carefully placing references to your account number, your routing number, and the amount of money that you want in ways that will only be detected by those who are "in the know." Use the following template for your own reviews.

Reviewer #1: This paper is an interesting, timely, important study on the effects of the default poop network. However, before I recommend it for publication, I have some major reservations, which in no way include asking for bribes.

Major issues:

1) The name of the second author, Betsy Honkswiggle, is identical to the name of a girl that I dated during my sophomore year in college, and I still have particularly bad memories about our breakup, which may or may not have involved a nasty custody struggle over a pet iguana. The negative associations are affecting my ability to objectively review this paper, and I recommend either immediate removal of the author from the paper, or that the author change her name to something more palatable, such as Harriet Beecher Stowe, or Pamela Anderson.

2) In figure 3557285492, the colors used to depict different effects could be changed to be more pleasing to the eye. Right now they are blue and green, which is somewhat drab; try a different set of colors, such as fuchsia or hot lemon.

Minor issues:

1) I realize this may not entirely be the authors' fault, but for the past couple of weeks I have been having some serious itching, the location of which, for decorum's sake, I won't go into. I've tried everything, from Tucks to half-and-half to primal scream therapy, but nothing seems to work. Do the authors have any recommendations for how to deal with this? Thanks!

2) When discussing the default poop network, please cite Dr. Will Brown's seminal 1994 paper.

3) For that matter, please cite the following other papers, which are related to your article. Of course, you don't need to cite all of these, but if you didn't, it'd be a shame if somethin' were to, you know, happen to this nice little article of yours, capisci?

-Fensterwhacker et al., 2011

-Fensterwhacker & Brown, 2009

-Fensterwhacker et al., in press

-Fensterwhacker et al., submitted

-Fensterwhacker & Honkswiggle, in prep

-Fensterwhacker, Bickswatter, & LeQuint, I swear we're very seriously considering doing this study

4) The fact that my name is on all of the preceding citations is purely a coincidence.

5) Also, if you believe that, you are, with all due respect, dumber than tinfoil.

6) On page 12, line 20, "your" should be "you're."

7) On page 16, the authors report an effect that has a cluster size of 348 voxels. This seems a little off to me; for some reason, I think this should be something more like, let's say, 017000371. This must be true, because I am a reviewer.

Once you have addressed all of these concerns, I may allow you to do a second round of reviews, after which I may go and do something completely nuts-o, such as recommending a reject and resubmit.

God bless you all,

Except for the atheists,

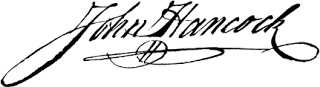

Dwayne "Five Thousand Bucks" Fensterwhacker III, Esq.