This is, barring any unforeseen circumstances, such as meteor strike, death by Nutella overdose, or floss strangulation, my last summer of graduate school at Indiana University; and I will be spending the majority of that time working on my dissertation to escape this durance vile.

However, I have also been thinking (which should worry you), and it appears to me that what this site has become is a pastiche of training and tutorials which deal with small, isolated problems: How to do this for your data, what it means, and then moving on to the next, often without a clear link between the two, and often with a joke or two thrown in for good measure as a diversion to briefly suspend the terror that haunts your guilt-ridden minds. No doubt some come here only to receive what goods they need and then ride on, I no more than a digital manciple supplying a growing, ravenous demand for answers. Some may come here to merely carp and backbite at my charlatanry; others out of a morbid curiosity for ribald stories and for whatever details about my personal life they can divine; and still others, perhaps, see something here that warms something inside of them.

What is needed, however, is a focus on what ties them all together; not only technique and methods, but ways of thinking that will stand you in good stead for whatever you wish to figure out, neuroimaging or otherwise. And, as new wine requires new bottles, a higher-order website is needed to serve as a hub where everything that I have created, along with other materials, lies together; where one is able to quickly find the answers that they need, whether through videos or links to the appropriate tools and scripts, along with educational material about the most important concepts to grasp before moving forward with a particular problem. Problem-solution flowcharts, perhaps. Applets that quiz you and record scores, possibly. And, of course, links to my haberdashers. (You think I am making a fortune? Ascots and cufflinks cost more, and without them one would not be in good taste.)

So much for exhortations. Actually putting this into practice is the difficult part, and is an effort that could, quite possibly, kill me from exhaustion, or at least cause me to procrastinate endlessly. We shall see. At least I have taken a few steps towards starting, which include looking at some HTML code and prodding at it thoughtfully through the computer screen with my finger. I'm not exactly sure how HTML works; but at least whenever I am working with it, I take pains to look very concerned and deep in thought.

However, before this endeavor there are still a few matters left to discuss, which I had earlier promised but still haven't delivered. In some states I would be hanged for such effrontery; but your continued patience means that either 1) You really, really don't want to read the manual (and who can blame you?), or 2) You find it worth the wait. Regardless, and whatever my behavior suggests to the contrary, I do want to help out with these concepts, but I do not always get to everyone's questions. Sometimes too much time passes, and they are gradually swept into the vast oubliette of other stuff I tend to forget; other times, some emails do in fact miss me, and aren't opened until much later, when it would seem too late to reply. In any case, I apologize if I have forgotten any of you. Below is a brief schedule of what I plan to cover:

1. Independent Components Analysis with FSL

2. Beta Series Analysis in SPM

3. Explanation of GLMs

4. Multiple Regression in SPM

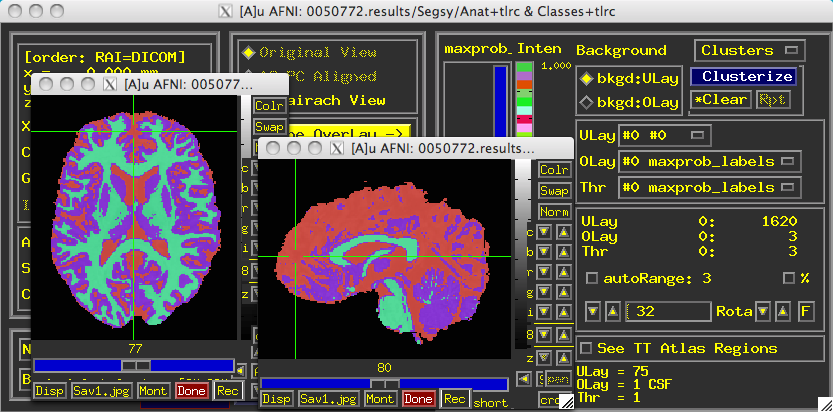

5. MVPA in AFNI

6. An explanation of GSReg, and why it's fashionable to hate it, using example data on the AFNI website

7. Overview of 3dTproject

And a couple of minor projects which I plan to get to at some point:

1. Comparison of cluster correction algorithms in SPM and AFNI

2. How to simulate Kohonen nets in Matlab, which will make your nerd cred go through the roof

We will start tomorrow with Independent Components Analysis, a method considerably different from seed-based analysis, starting with the differences between the two and terminating at a destination calamitous beyond all reckoning; and then moving on to Beta Series analysis in SPM, which means doing something extremely complicated through Matlab, and plagiarizing code from one of my mentors; and then the rest of it in no set order, somewhere within the mists beyond right knowing where the eye rolls crazily and the lip jerks and drools.