Mankind craves unity - the peace that comes with knowing that everyone thinks and feels the same. Religious, political, and social endeavors have all been directed toward this same end: that all men have the same worldview, the same Weltanschauung. Petty squabbles about things such as guns and abortion matter little when compared to the aim of these architects. See, for example, the deep penetration into our bloodstream by words such as equality, lifestyle, value - words of tremendous import, triggering automatic and powerful reactions without our quite knowing why, and with only a dim awareness of where these words came from. That we use and respond to them constantly is one of the most astounding triumphs of modern times; whether we could even judge this to be a good or bad thing has already been rendered moot. Best not to try.

It is only fitting, therefore, that we as neuroimagers all "get on the same page" and learn "the right way to do things," and, when possible, make "air quotes." This is another way of saying that this blog is an undisguised attempt to dominate the thoughts and soul of every neuroimager - in short, to ensure unity. And I can think of no greater emblem of unity than the standard normal distribution, also known as the Z-distribution - the end, the omega, the seal of all distributions. The most vicious of arguments, the most controversial of ideas are quickly resolved by appeal to this monolith; it towers over all research questions like a baleful phallus.

There will be no end to bantering about whether to abolish the arbitrary threshold of p < 0.05, but the bantering will be just that. The standard exists for a reason - it is clear, simple, understood by nearly everyone involved, and is as good a standard as any. A multitude of standards, a deviation from what has become so steeped in tradition, would be chaos, mayhem, a catastrophe. Again, best not to try.

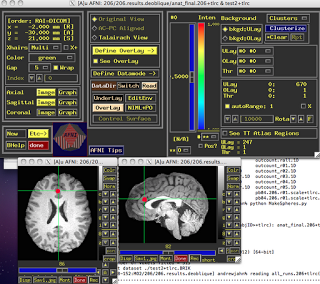

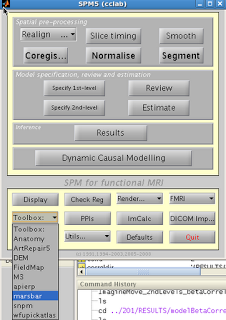

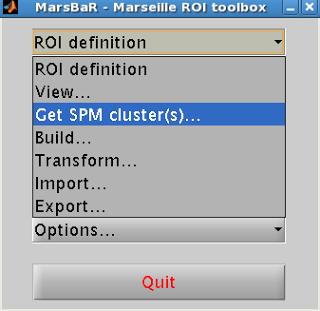

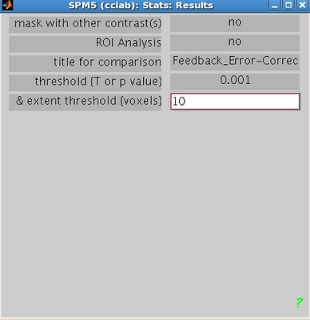

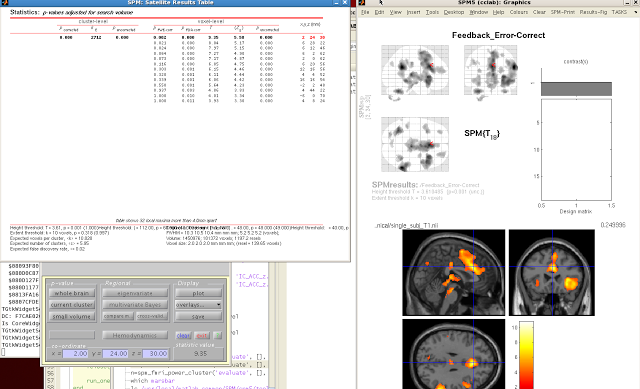

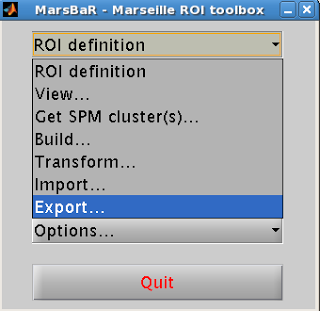

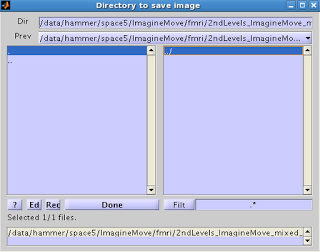

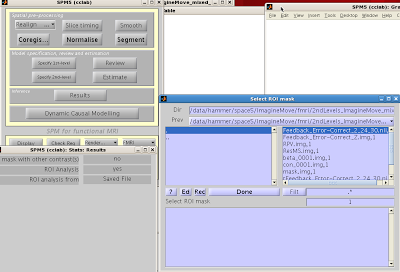

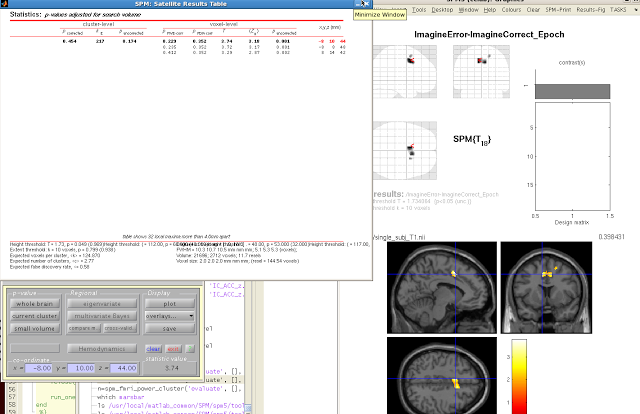

I wish to clear away your childish notions that the Z-distribution is unfair or silly. On the contrary, it will dominate your research life until the day you die. Best to get along with it. The SPM code below will allow you to do just that - convert any statistical map to the normal distribution, so that your results can be understood by anyone. Even those who disagree, or wish to disagree, with the nature of this thing will be forced to accept it. A shared Weltanschauung is a powerful thing. The most powerful.

The following Matlab snippet was created by my adviser, Josh Brown. I take no credit for it, but I use it frequently, and believe others will get some use out of it. The image calculators in each of the major packages (ImCalc in SPM, 3dcalc in AFNI, fslmaths in FSL) all do the same kind of thing, applying an expression to every voxel of an image, and this is merely one application of it. The more one gets used to applying these transformations to achieve a desired result, the more intuitive it becomes to work with the data at any stage - registration, normalization, statistics, all.

%
% Usage: convert_spm_stat(conversion, infile, outfile, dof)
%
% This script uses a template .mat batch script object to
% convert an SPM (e.g. SPMT_0001.hdr,img) to a different statistical rep.
% (Requires matlab stats toolbox)
%
% Args:
% conversion -- one of 'TtoZ', 'ZtoT', '-log10PtoZ', 'Zto-log10P',
%               'PtoZ', 'ZtoP'
% infile -- input file stem (may include full path)
% outfile -- output file stem (may include full path);
%            if empty, defaults to [infile '_' conversion]
% dof -- degrees of freedom (number or numeric string; used by 'TtoZ' and 'ZtoT')
%
% Created by: Josh Brown
% Modification date: Aug. 3, 2007
% Modified: 8/21/2009 Adam Krawitz - Added '-log10PtoZ' and 'Zto-log10P'
% Modified: 2/10/2010 Adam Krawitz - Added 'PtoZ' and 'ZtoP'
function completed=convert_spm_stat(conversion, infile, outfile, dof)
% Assume failure until the job has run, so that an early return
% still leaves the output argument assigned
completed = 0;
old_dir = cd();
% Build the ImCalc expression; i1 stands for the input image
if strcmp(conversion,'TtoZ')
    expval = ['norminv(tcdf(i1,' num2str(dof) '),0,1)'];
elseif strcmp(conversion,'ZtoT')
    expval = ['tinv(normcdf(i1,0,1),' num2str(dof) ')'];
elseif strcmp(conversion,'-log10PtoZ')
    expval = 'norminv(1-10.^(-i1),0,1)';
elseif strcmp(conversion,'Zto-log10P')
    expval = '-log10(1-normcdf(i1,0,1))';
elseif strcmp(conversion,'PtoZ')
    expval = 'norminv(1-i1,0,1)';
elseif strcmp(conversion,'ZtoP')
    expval = '1-normcdf(i1,0,1)';
else
    disp(['Conversion "' conversion '" unrecognized']);
    return;
end
% Default output name if none was given
if isempty(outfile)
    outfile = [infile '_' conversion];
end
%%% Now load into template and run
jobs{1}.util{1}.imcalc.input{1}=[infile '.img,1'];
jobs{1}.util{1}.imcalc.output=[outfile '.img'];
jobs{1}.util{1}.imcalc.expression=expval;
% run it:
spm_jobman('run', jobs);
cd(old_dir)
disp(['Conversion ' conversion ' complete.']);
completed = 1;
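If you want to see what these expressions do before turning them loose on an image, the same formulas can be run on plain numbers. What follows is a minimal sketch and not part of the original script: the values of `t` and `dof` are made up for illustration, and it assumes the Statistics Toolbox is on your path. It checks that the 'TtoZ' expression preserves the one-tailed p-value and that the inverse conversions take you back where you started.

```matlab
% Sanity check of the conversion formulas on a single value
% (requires the Statistics Toolbox).
t   = 2.5;   % hypothetical T-statistic at one voxel
dof = 24;    % hypothetical degrees of freedom

% Same expression the script builds for 'TtoZ'
z = norminv(tcdf(t, dof), 0, 1);

% The one-tailed p-value should be identical under both distributions
p_from_t = 1 - tcdf(t, dof);
p_from_z = 1 - normcdf(z, 0, 1);

% 'ZtoT' should recover the original T-value
t_back = tinv(normcdf(z, 0, 1), dof);

% 'Zto-log10P' followed by '-log10PtoZ' should recover the Z-value
neglogp = -log10(1 - normcdf(z, 0, 1));
z_back  = norminv(1 - 10.^(-neglogp), 0, 1);

fprintf('t = %.3f -> z = %.3f (one-tailed p = %.4f)\n', t, z, p_from_z);
```

Because the t-distribution has heavier tails than the normal, the Z-value comes out somewhat smaller than the T-value for positive t; the p-value is the thing that stays fixed.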

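Assuming you have a T-map generated by SPM from a one-sample t-test on 25 subjects, a sample command might be:

convert_spm_stat('TtoZ', 'spmT_0001', 'spmZ_0001', '24')

Note that the last argument is the degrees of freedom, which for a one-sample t-test is N-1 (here, 25 - 1 = 24). Other designs have different degrees of freedom, so check the value SPM reports for your model. Because the script passes it through num2str, either the string '24' or the number 24 will work.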
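One caveat, offered as an assumption about floating-point behavior rather than anything stated in the original script: for very large T-values, `tcdf` can round to exactly 1, at which point `norminv` returns `Inf`. A possible workaround is to compute the small tail probability instead and rely on the symmetry of both distributions. The handle name `ttoz_stable` below is hypothetical, and the input values are invented for illustration.

```matlab
% Sketch of a tail-safe T-to-Z conversion (requires the Statistics Toolbox).
% ttoz_naive mirrors the script's 'TtoZ' expression; ttoz_stable is a
% hypothetical alternative that always works from the smaller tail.
ttoz_naive  = @(t, dof) norminv(tcdf(t, dof), 0, 1);
ttoz_stable = @(t, dof) -sign(t) .* norminv(tcdf(-abs(t), dof), 0, 1);

dof = 24;                     % hypothetical degrees of freedom
t   = [-40 -2.5 0 2.5 40];    % made-up values, including extreme ones

z_naive  = ttoz_naive(t, dof);
z_stable = ttoz_stable(t, dof);

% Rows: input T-values, naive Z-values, tail-safe Z-values
disp([t; z_naive; z_stable]);
```

For moderate values the two versions should agree; they are expected to part ways only in the far upper tail. If this matters for your data, the same idea could be written as an ImCalc expression such as '-sign(i1).*norminv(tcdf(-abs(i1),24),0,1)', but treat that as an untested suggestion rather than a drop-in replacement.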
