"It is a duty incumbent on upright and creditable men of all ranks who have performed anything noble or praiseworthy to record in their own words the events of their lives. But they should not undertake this honorable task until they are past the age of forty."

-Benvenuto Cellini, opening sentence of his Autobiography (c. 1558)

- Date within your cohort! Or not. Either way, you'll have a great time! Maybe.

- If you have more than ten things to say, you can make a longer list.

- If you rearrange the letters in the name "Spiro Agnew," you can spell "Grow A Penis." Really? Really.

- Think of teaching a class as a PG-13 movie: to keep the class titillated and interested, you're allowed to make slightly crude references without being explicit; and, if you want, you're entitled to say the f-word ("fuck") once during the semester.

- When they say, "Don't date your students until the class is over," they mean when the semester is over, not just when classtime is over.

- Virtually everyone who throws around the word "sustainable" has no idea what they're talking about, unless it's that water situation in California. Things are seriously f-worded over there.

- If you come into graduate school not knowing how to code, teach yourself. Only after getting frustrated and making no headway, only after you have exhausted every avenue of educating yourself - only then is it acceptable to find someone else to do it for you, and then take credit for it. You gotta at least try!

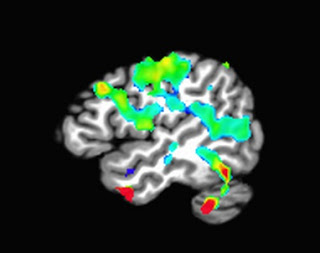

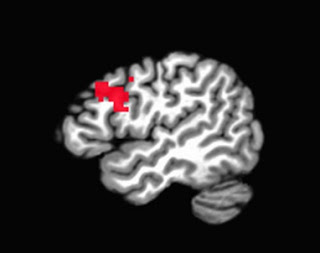

- You know you've been doing neuroimaging analysis for a long time when you don't think twice about labeling a directory "anal." Ditto for "GroupAnal."

- You know you've been in graduate school too long when you can remember the deidentification codes for all of your subjects, but not necessarily the names of all of your children.

- When you first start a blog in graduate school, everything you write is very proper and low-key, for fear that you may offend one of your colleagues or a potential employer. Then after a while you loosen up. Then you tighten up again when you're on the job market. Then you get some kind of employment and you loosen up again. And so on.

- When I first started blogging, I figured that people would take the most interest in essays that I had taken considerable pains over, usually for several days or weeks. Judging from the number of hits for each post, readers seem to vastly prefer satirical writings about juvenile things such as "the default poop network," and humorous neuroimaging journal titles with double entendres - silly crap I dashed off in a few minutes. Think about that.

- The whole academic enterprise is more social than anything. It sounds obvious and you will hear it everywhere, but you never appreciate it until you realize that you can't just piss off people arbitrarily and not suffer any consequences somewhere down the line. Likewise, if you are good to people and write them helpful blog posts and make them helpful tutorial videos, they are good to you, usually. Kind of like with everything else in life.

- If you get a good adviser, do not take that for granted. Make every effort to make that man's life easier by doing your duties, and by not breaking equipment or needlessly stabbing his other graduate students. By "good adviser" I mean someone who is considerate, generous with his time and resources, and clear about what you need to do to get your own career off the ground while giving you enough space to develop on your own. I had such an adviser, and that is a big part of the reason that the past five years of my life have also been the best five years. That, and the fact that I can rent cars on my own now.

Have you finished graduate school, landed a slightly higher-paying but still menial and depressing job, and now want to share your wisdom with the newer generation of young graduate students? Can you rent a car on your own now? Did you try the Spiro Agnew Anagram Challenge (SAAC)? Share your experiences in the comments section!